To have an opinion on something, you must first understand the subject thoroughly. It is a timeless and simple rule: knowledge first, opinion second. If we skip the first step, what comes out isn’t really an insight – it’s just noise.

When people talk without knowledge, they mislead others, and often themselves too. They sound sure, but underneath that certainty is mostly guesswork. Still, many continue to speak before they learn. They are everywhere: on the streets, in cafes, in politics. What’s worse is that some of them don’t even know there’s a rule to follow. They don’t know what they don’t know. It is sad.

The knowledge-first-opinion-second principle really matters. Especially now, when truth feels like a moving target and facts get twisted. And it matters even more when it comes to the platforms we scroll through every day.

Each generation has its favorite platforms. According to Pew Research and Statista, baby boomers tend to stick with Facebook and YouTube. Millennials are more drawn to X, Instagram and YouTube, while Gen Z, aka zoomers, prefers TikTok, YouTube Shorts and Instagram Reels. But no matter your age, the data makes it clear that social media is where so many of us go to make sense of the world, whether it’s about health, politics, the economy or society at large. And that’s fine. But when subjectivity is not handled with care and drifts too far from the facts, that’s where things start to get messy. Or even dangerous.

People deserve better. They deserve honesty. They deserve to know what’s truly happening before they’re pulled into someone else’s narrative. Because subjectivity isn’t the problem; how we use it is.

In January 2024, DataReportal reported that there are 5.04 billion social media users around the world (62.3% of the global population). On average, each user spends about two hours and 24 minutes on platforms every day. Asia has the highest number of users (around 2.5 billion), while Oceania has the smallest share, with 25 million users who spend roughly 1.9 hours online daily. According to Meta’s latest report, Facebook leads the way with 3.05 billion monthly active users, making it the most widely used platform. Statista confirms this top spot, with YouTube, WhatsApp, Instagram and TikTok, in that order, rounding out the top five.

We could go deeper into the percentages, but what matters here is not crunching statistics. It is reflecting on how central social media tools have become to daily life across the global population, regardless of age or location.

So, how did we end up here, in a world where everyone seems to be online? It is the result of the unstoppable evolution of technology. Right now, that evolution is speeding ahead toward AI, which, while full of potential, also brings us dangerously close to a major risk: a flood of misinformation and disinformation.

Risk correlations

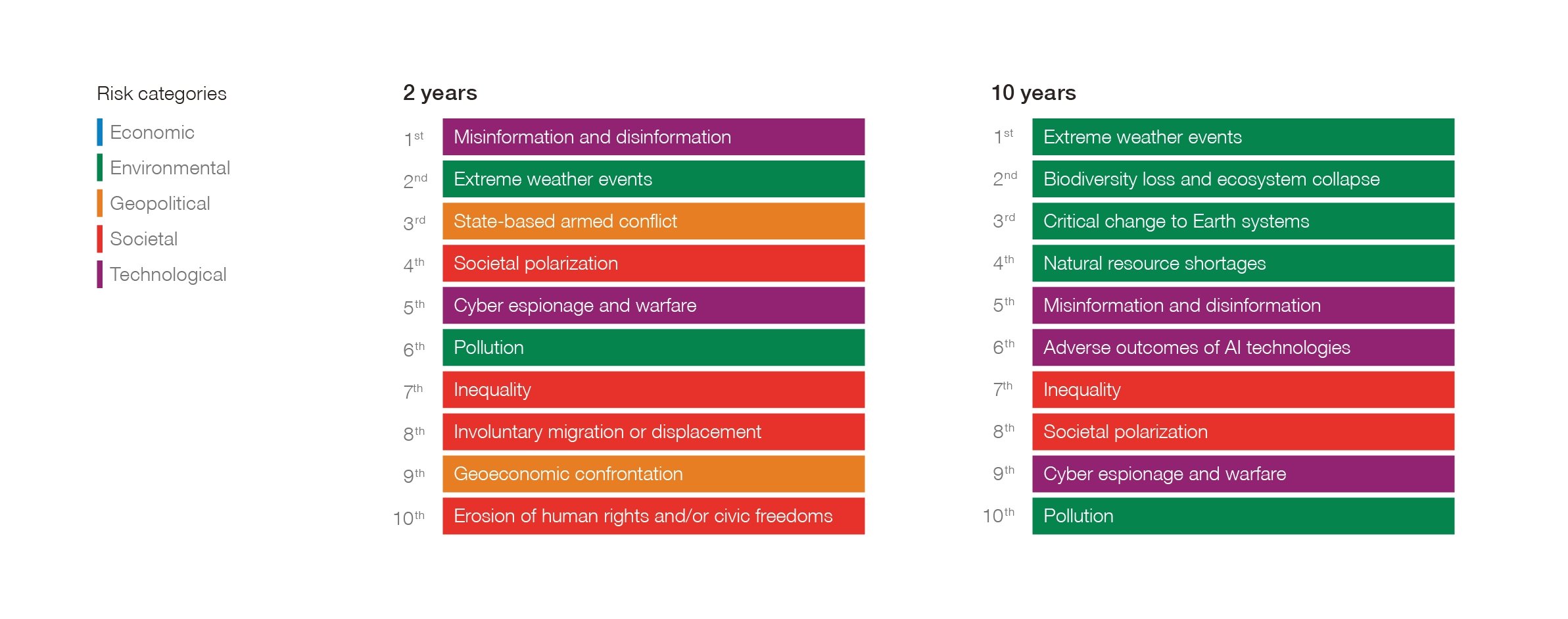

The World Economic Forum (WEF), in its annual “Global Risks Report 2025,” issued a pretty eye-opening warning. It says that misinformation and disinformation will remain a “top risk” until 2027, only to be surpassed by “extreme weather” over the next decade. “Misinformation and disinformation vs. extreme weather”? It is terrifying.

In the preface of this historic report, Saadia Zahidi, the WEF’s managing director, described misinformation and disinformation as “the top-ranked short- to medium-term concern across all risk categories.” For Zahidi, there’s a clear connection between the threat of misinformation and disinformation and the rise of generative AI, which is used to create false or misleading content “that can be produced and distributed at scale.”

The report also links “the geopolitical environment” to the correlation between AI and misinformation and disinformation. It further highlights another interconnection, directly tied to conflict zones, where the evolution of AI makes it increasingly difficult to distinguish between AI-generated and human-generated misinformation and disinformation. This is a key reason why the WEF considers conflict zones to be more vulnerable to further unrest.

The WEF puts it plainly:

- “Rising use of digital platforms and a growing volume of AI-generated content are making divisive misinformation and disinformation more ubiquitous.”

- “Algorithmic bias could become more common due to political and societal polarization and associated misinformation and disinformation.”

- “Deeper digitalization can make surveillance easier for governments, companies and threat actors, and this becomes more of a risk as societies polarize further.”

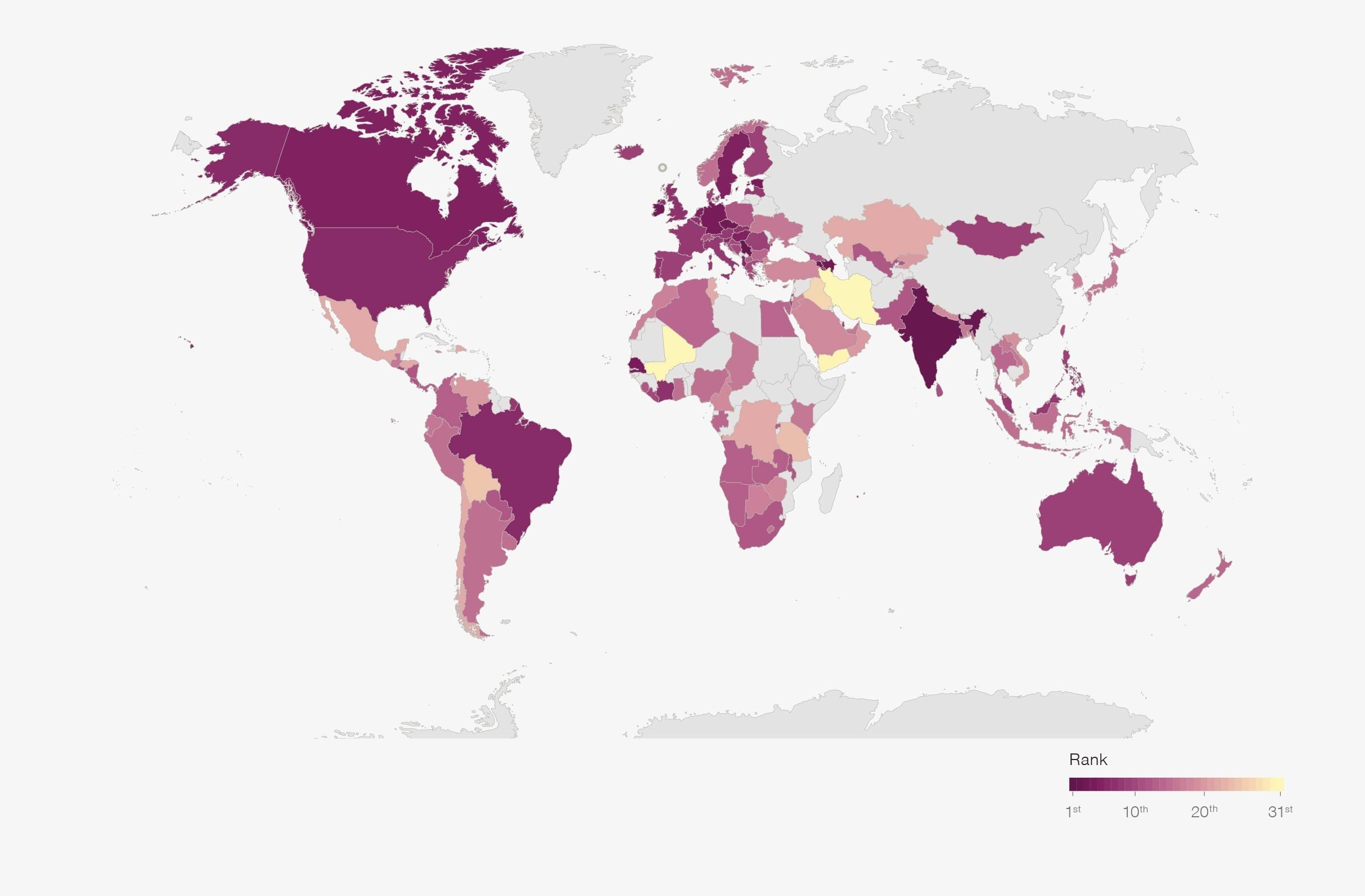

According to the report, no specific region is immune; rather, all corners of the world are at risk, and the threat is present across a diverse range of countries, including India (#1), Germany (#4), Brazil (#6) and the United States (#6).

Created and misunderstood

Evidently, the overwhelming use of social media and the rising threat of misinformation and disinformation have become defining features and a central dilemma of our time. And when you add in the fact that so many people are forming and sharing opinions without solid knowledge – thousands of times a day – it’s clear that the situation isn’t exactly encouraging. That makes awareness more important than ever.

You might recall a now-famous quote by former Google CEO Eric Schmidt: “The Internet is the first thing that humanity has built that humanity doesn’t understand, the largest experiment in anarchy that we have ever had.” Though spoken years ago, his words ring truer now than ever. The chaos he described has only deepened, and every new report and study seems to confirm his thesis. If the digital world is an experiment in anarchy, then one might ask – how much further can the disorder go?

Perhaps it’s best not to answer that question. Yet every scroll through our social media feeds, every encounter with doctored headlines or viral falsehoods, offers a clue.

So what can be done? There may be only one path forward: to reverse the current order of things and return to the basic principle of “knowledge first, opinion second.” We must learn to approach every piece of content with a kind of deliberate skepticism. In fact, we may even need to cultivate what might be called a “productive paranoia” – an active, questioning mindset that constantly asks: Is this grounded in fact? Has this been vetted? Is this opinion supported by knowledge? A little productive paranoia might just be the only way to stay sane.

This is not just a societal challenge; it’s also deeply personal. Every day, in the way we talk and the choices we make online, we shape the digital ecosystem we live in.

The ancient Roman philosopher Seneca once said, “docendo discimus” – “by teaching, we learn.” It’s a gentle reminder that understanding isn’t something we’re just given; it’s something we build, little by little, every time we read, write, speak or share. If you’ve read this, please know that these words weren’t written from a place of superiority. They come from someone still trying to make sense of things, especially in the tangled web of social media.